If the source file is not available then ETL execution will fail. For avoiding this failure, we can implement a task to check the source file existence, based on source file availability we can take further action.

In this post i am going to explain 'Source file existence check' in two ways

- Using 'Script task'

- Using 'Foreach Loop container'

Using Script task:

Step 1:

- Create the below three variables

- Name: SourceDirectory Data type: String Value: D:\Source\ (Source directory)

- Name: SourceFileName Data type: String Value: Customer.txt (Source file name)

- Name: FileExistence Data type: String Value: False

Step 2:

- From Toolbox drag and drop 'Script task'.

- Edit the script task and select ScriptLanguage as "Microsoft Visual Basic". Select 'SourceDirecotry' and 'SourceFileName' variables in the "ReadOnlyVariable" list. Select 'FileExistence' variable from the "ReadWriteVariables" list.

- Click on "Edit Script..." button. Vb script will open, used the below script to check source file availability.

dim

FullPath = Dts.Variables("User::SourceDirectory").Value.ToString()

+ Dts.Variables("User::SourceFileName").Value.ToString()

If System.IO.File.Exists(FullPath) Then

Dts.Variables("User::FileExistence").Value = "True"

Else

Dts.Variables("User::FileExistence").Value = "False"

End If

- Save the script. Close the 'Integration Service Script Task' window.

- Click on 'Script Task Editor' window 'OK' button.

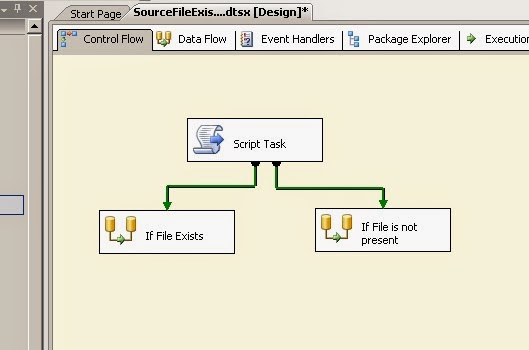

- From Toolbox drag and drop two 'Data flow tasks'. 1st task will run if source file is present. If source file is not present second task will run. For this process i am going to write expressions.

- Connect the Script task green arrows (precedence constraint) to the Data flow tasks.

Step 3:

- Right click on the first 'Precedence Constraint' and select 'Edit' option.

- 'Precedence constraint Editor' window will open.

- Select 'Expression and Constraint' from the 'Evaluation operation' drop-down list.

- Writhe the expression @[User::FileExistence] == "True" in the 'Expression' text box.

- Same way write expression (@[User::FileExistence] == "False" ) for second 'Precedence constraint'

Step 4:

- Now the Package is ready. Source file is present, so if i run the package, first 'Data flow task' should run.

- In the above screen we can see that the first 'Data flow task' is executed.

- Now i have removed the source file. If i run the ETL second 'Data flow task' should run.

- In the above screen we can see that the second Data flow task is executed. So source file is not present.

Using Foreach Loop container:

Step 1:

- Create a variable

- Name: FileExistence Data type: String Value: False

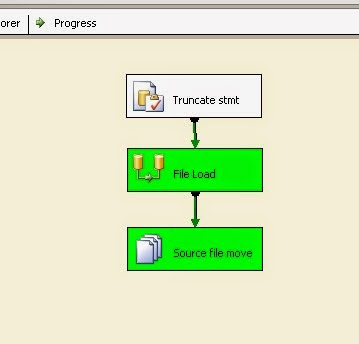

- Drag and drop "Foreach Loop container" from the Toolbox.

- Edit the "Foreach Loop container". Go to 'Collection'.

- Select 'Foreach File Enumerater' from the 'Enumerator' drop-down list.

- Give the "Folder path" and File name.

Step 3:

- Go to Variable mapping. Map the 'FileExistance' Variable.

- Click on 'OK' button.

- Drag and drop two data flow tasks from the Toolbox.

- Connect the "Foreach loop container" green arrow to the data flow tasks.

- Right click on the first 'Precedence Constraint' and select 'Edit' option.

- 'Precedence constraint Editor' window will open.

- Select 'Expression and Constraint' from the 'Evaluation operation' drop-down list.

- Writhe the expression @[User::FileExistence] != "False" in the 'Expression' text box.

- Same way write expression (@[User::FileExistence] == "False" ) for second 'Precedence constraint'

Step 4:

- Now the Package is ready. Source file is present, so if i run the package, first 'Data flow task' should run.

- In the above screen we can see that the first 'Data flow task' is executed.

- Now i have removed the source file. If i run the ETL second 'Data flow task' should run.

- In the above screen we can see that the second Data flow task is executed.